Security engineers want speed. Legal wants control. Privacy wants restraint. Can an AI-driven response system satisfy all three? Yes—but only if it’s built with governance in mind.

Autonomous security systems sound powerful. Detection models that improve themselves. Playbooks that rewrite their logic. Incident response that unfolds in milliseconds.

But the moment you say “no human in the loop,” the room changes.

❗ “Who’s accountable if something goes wrong?”

❗ “How do we prove what happened during an audit?”

❗ “Can this system violate a user’s privacy policy?”

These aren’t just hypothetical questions—they’re the frontline concerns of your legal, privacy, and compliance stakeholders.

And if they’re not addressed head-on, your autonomous response system will never see production.

🧠 The Mental Model: Explainable Autonomy, Not Black Box Magic

To bridge the gap between capability and trust, we introduce a new model:

Governed Automation = Explainable AI + Immutable Logs + Tiered Control

It means:

- AI can act autonomously, but its logic is reviewable.

- Every decision is recorded and time-stamped immutably.

- Controls are adjustable based on incident type, severity, and stakeholder preference.

This isn’t “set it and forget it.” This is autonomy with guardrails.

🛠️ How It Works in Practice

✅ 1. Explainability Built-In

Every action a model or playbook takes is traceable to its inputs.

e.g., “Isolation triggered due to EBM score 0.91, unusual login time, and 3 failed authentications.”

✅ 2. Immutable Decision Logging

All decisions are written to a cryptographic ledger (e.g., blockchain-style append-only log) for audit.

✅ 3. Policy Enforcement Layer

Before any automated action occurs, it’s evaluated against:

- Privacy thresholds (data scope, user consent)

- Legal escalation rules (e.g., customer notification)

- SLA commitments (e.g., action time windows)

- Compliance & Assurance metrics

- Risk metrics

- Governance exceptions

- Asset and object specific classifiers

✅ 4. Tiered Automation Controls

Certain playbooks can run in:

- Full Auto: Immediate action

- Semi Auto: Action with notification

- Manual Review: AI suggests, human approves

🔍 Real Example: Privacy-Sensitive Containment

A healthcare org used the system to flag anomalous downloads of sensitive patient data.

- The system detected a pattern, scored it high-risk, and prepared a containment response.

- But before acting, the policy engine blocked auto-quarantine because the user was a clinician accessing consented records during off-hours within & across the authorized geography.

- Instead, it escalated the case for manual approval, citing HIPAA flag threshold not met.

This wasn’t just smart—it was safe.

🧩 Why This Matters to Stakeholders

| Stakeholder | Concern | AI-Based Solution |

|---|---|---|

| General Counsel | Legal exposure from auto-actions | Immutable logs, tiered approval modes |

| Privacy Officer | User rights and data handling | Policy engine enforces consent + scope |

| CISO | Risk ownership and control | Explainable AI + override access + auditability |

| Compliance Team | Regulatory reporting | Timeline view of every detection and response step |

Without these layers, autonomy is a liability.

With them? It’s a force multiplier.

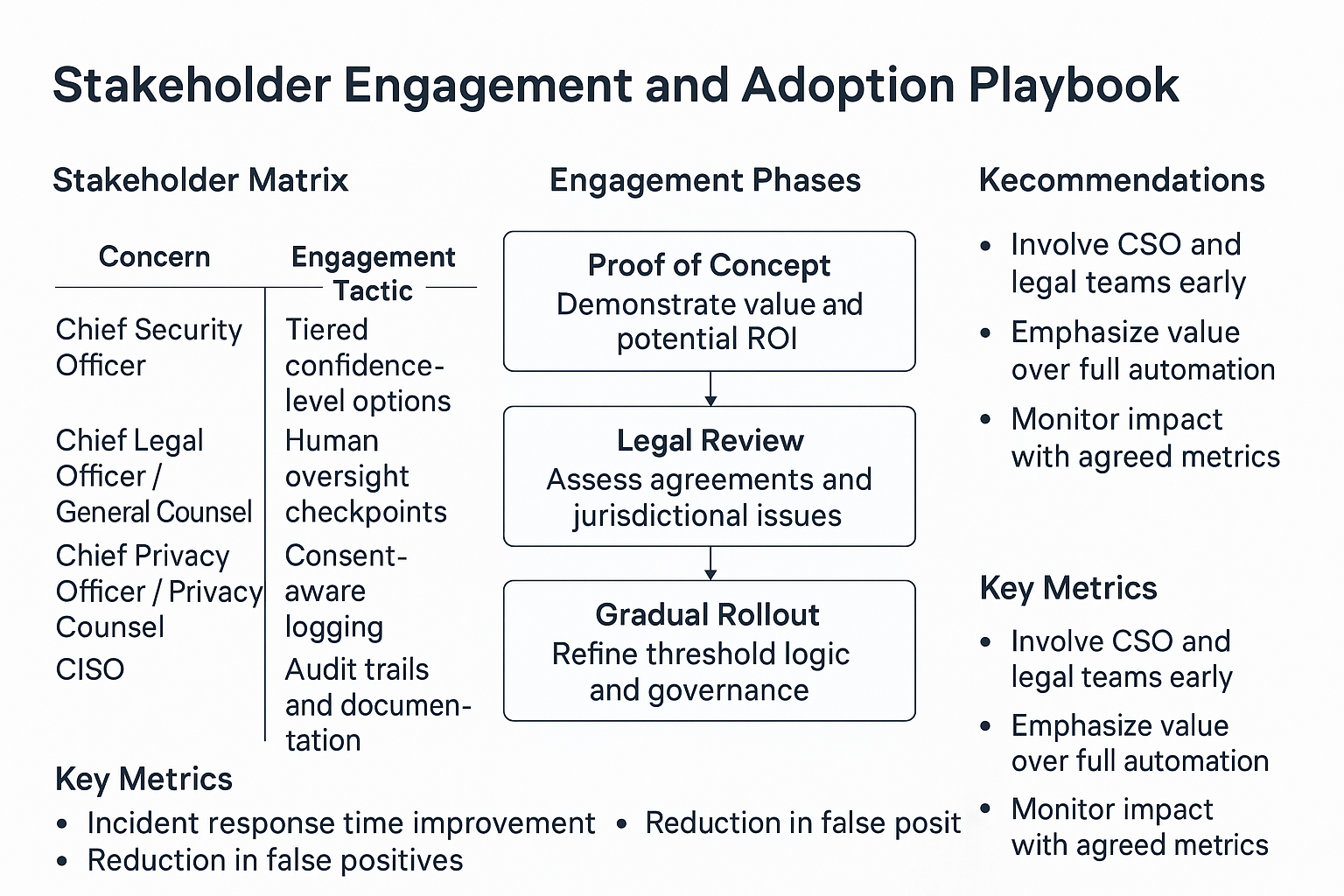

For instance, here is an overly simplified stakeholder engagement and adoption playbook.

🎯 Your Move

Ask yourself:

- Can you explain your system’s last automated response in detail?

- Could you prove to an auditor that it behaved appropriately?

- Can legal veto or shape the automation policy?

If the answer is no, you don’t have a trustworthy system. You have a ticking risk vector.

👉 Governance is what turns automation into autonomy. Don’t just build fast—build responsibly. Read the full white paper or dive into the latest podcast episode to learn more.